Cluster membership with the SWIM protocol and the memberlist library

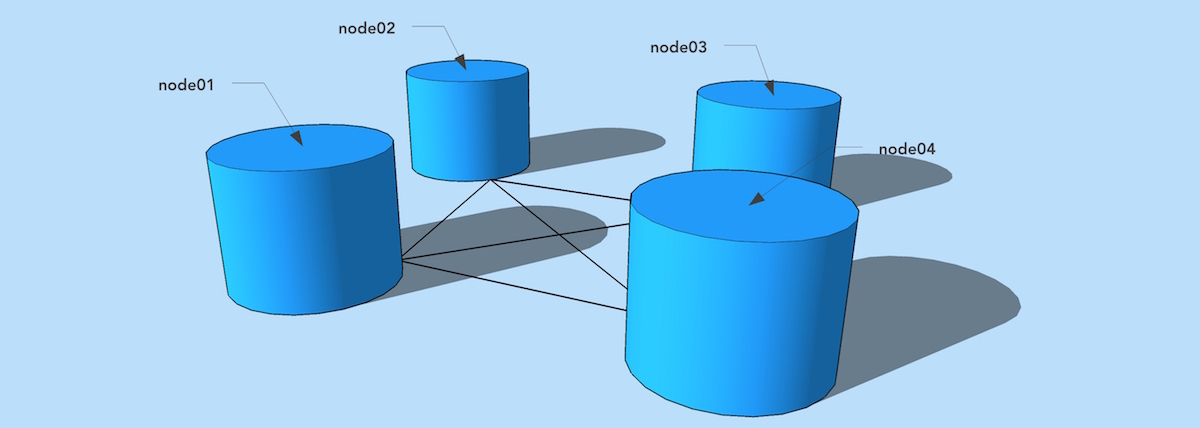

Toy example of cluster membership with SWIM. Written in Golang.

One of the hallmarks of a distributed system is its coordination protocol, which is often a gossip protocol. Without a single machine in charge of managing a group of machines, how do they communicate and identify themselves as part of the same group? The answer is usually some type of gossip protocol. The SWIM protocol is emerging as a standard protocol for tracking node membership. This post is about how to configure and play around with HashiCorp’s implementation of the SWIM protocol, a Golang library called memberlist (github.com/hashicorp/memberlist).

If you’d like to cut to the chase, and download the source code for this post, you can go straight to github.com/vogtb/writing-swim-member. If you’re interested in how I got there, then keep reading!

The hashicorp/memberlist library uses the SWIM protocol to identify which nodes belong to a cluster, and, in the event of a network partition or node failure, which nodes are no longer a part of the cluster. It does this by having each node periodically ping other nodes in the cluster to see if they’re still alive. If a node is unable to reach a member of the cluster, it asks other nodes to check whether the unreachable node is still alive. If one of them is able to reach it, then the cluster is still intact, even though the first node can’t reach the second one directly. If no other nodes in the cluster are able to reach the downed node, it is marked as “suspect” (suspected of being dead) and given a certain amount of time to respond before it is marked dead and subsequently removed from the cluster.
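To make the ping-then-ask-around step concrete, here is a minimal sketch of a single probe in plain Go. It does not use memberlist at all: the `Pinger` type, the `Probe` function, and the broken-link scenario in `main` are all hypothetical names and data made up for illustration, and the real library does this over UDP and TCP with timeouts.

```go
package main

import "fmt"

// State is a node's status as seen by the prober in this toy model.
type State string

const (
	Alive   State = "alive"
	Suspect State = "suspect"
)

// Pinger reports whether a ping sent from one node reaches another.
// It is a hypothetical stand-in for the network, not a memberlist type.
type Pinger func(from, to string) bool

// Probe runs one simplified probe of target from self: a direct ping
// first, then indirect pings relayed through at most k helper nodes.
func Probe(self, target string, helpers []string, k int, ping Pinger) State {
	if ping(self, target) {
		return Alive
	}
	for i, h := range helpers {
		if i >= k {
			break
		}
		// The helper must be reachable from us and must reach the target.
		if ping(self, h) && ping(h, target) {
			return Alive
		}
	}
	return Suspect
}

func main() {
	// Made-up scenario: the link node01 -> node03 is broken, node04 is down.
	broken := map[[2]string]bool{{"node01", "node03"}: true}
	down := map[string]bool{"node04": true}
	ping := func(from, to string) bool {
		return !down[to] && !broken[[2]string{from, to}]
	}

	fmt.Println(Probe("node01", "node03", []string{"node02"}, 1, ping))
	fmt.Println(Probe("node01", "node04", []string{"node02"}, 1, ping))
}
```

In this scenario node03 is still reported alive because node02 can reach it, while node04 becomes suspect because nobody can.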

While there are certainly better explanations of the SWIM protocol, that’s as much as you need to know to follow the rest of this post. To observe how SWIM works, let’s write a simple Go app that starts up, joins a cluster, and prints all the members in the membership list.

    package main

    import (
        "log"
        "time"

        "github.com/hashicorp/memberlist"
    )

    func main() {
        log.Println("Starting node.")

        config := memberlist.DefaultLocalConfig()
        list, err := memberlist.Create(config)
        if err != nil {
            // Without a memberlist there is nothing to join, so stop here.
            log.Fatalln("Failed to create memberlist: " + err.Error())
        }

        // Join the cluster through a known address. Join returns the number
        // of hosts that were successfully contacted.
        clusterCount, err := list.Join([]string{"localhost"})
        // Pass the int directly: string(clusterCount) would convert it to a
        // rune instead of printing the number.
        log.Println("Joining cluster of size", clusterCount)
        if err != nil {
            log.Println("Failed to join cluster: " + err.Error())
        }

        // Every two seconds, log the members list
        for {
            time.Sleep(time.Second * 2)
            log.Println("Members:")
            for _, member := range list.Members() {
                log.Printf(" %s %s\n", member.Name, member.Addr)
            }
        }
    }

To watch this in action we can run it on our local machine like this:

    2016/10/26 18:42:32 Starting node.
    2016/10/26 18:42:32 [DEBUG] memberlist: Initiating push/pull sync with: [::1]:7946
    2016/10/26 18:42:32 [DEBUG] memberlist: TCP connection from=[::1]:60158
    2016/10/26 18:42:32 [DEBUG] memberlist: TCP connection from=127.0.0.1:60159
    2016/10/26 18:42:32 [DEBUG] memberlist: Initiating push/pull sync with: 127.0.0.1:7946
    2016/10/26 18:42:32 Joing cluster of size
    2016/10/26 18:42:34 Members:
    2016/10/26 18:42:34 xwing.local 127.0.0.1
    2016/10/26 18:42:36 Members:
    2016/10/26 18:42:36 xwing.local 127.0.0.1
    2016/10/26 18:42:38 Members:
    2016/10/26 18:42:38 xwing.local 127.0.0.1
    2016/10/26 18:42:40 Members:
    2016/10/26 18:42:40 xwing.local 127.0.0.1
    2016/10/26 18:42:42 Members:
    2016/10/26 18:42:42 xwing.local 127.0.0.1

But you’ll notice that there’s only one node in the member list. Because the library uses hostname (xwing.local) and IP (127.0.0.1) to identify a node, we need several different machines to properly demonstrate the protocol. Using Vagrant we can set up four hosts: node01, node02, node03, and node04. Since the focus of this post isn’t Vagrant, I’ll skip a lot of the details and just let you know that:

- the four instances can run the compiled Go binary
- they have DNS resolution to one another
- they have the following configurations:

      192.168.50.6 node01
      192.168.50.10 node02
      192.168.50.7 node03
      192.168.50.11 node04

Because the memberlist library depends on DNS resolution and public IP, and we have a simple vagrant configuration in our example, I’m going to cheat a little bit and add a hostname-to-IP map so we can set each member’s IP address directly in the configuration. So now our Go app looks like this:

    package main

    import (
        "log"
        "os"
        "time"

        "github.com/hashicorp/memberlist"
    )

    func main() {
        log.Println("Starting node.")

        hostname, err := os.Hostname()
        if err != nil {
            log.Fatalln("Failed to read hostname: " + err.Error())
        }

        // Hard code hostname and IP address
        hostMap := map[string]string{
            "node01": "192.168.50.6",
            "node02": "192.168.50.10",
            "node03": "192.168.50.7",
            "node04": "192.168.50.11",
        }

        // Bind to this host's address from the map above.
        config := memberlist.DefaultLocalConfig()
        config.BindAddr = hostMap[hostname]
        list, err := memberlist.Create(config)
        if err != nil {
            // Without a memberlist there is nothing to join, so stop here.
            log.Fatalln("Failed to create memberlist: " + err.Error())
        }

        // Join the cluster through node01. Join returns the number of hosts
        // that were successfully contacted.
        clusterCount, err := list.Join([]string{hostMap["node01"]})
        // Pass the int directly: string(clusterCount) would convert it to a
        // rune instead of printing the number.
        log.Println("Joining cluster of size", clusterCount)
        if err != nil {
            log.Println("Failed to join cluster: " + err.Error())
        }

        // Every two seconds, log the members list
        for {
            time.Sleep(time.Second * 2)
            log.Println("Members:")
            for _, member := range list.Members() {
                log.Printf(" %s %s\n", member.Name, member.Addr)
            }
        }
    }

When we start a node we tell it which existing node to join, in this case node01. So if we start our app on node01, let’s see what happens.

    vagrant@node01:~$ ./writing-swim-member.linux.amd64
    2016/10/26 23:45:12 Starting node.
    2016/10/26 23:45:12 [DEBUG] memberlist: TCP connection from=192.168.50.6:38569
    2016/10/26 23:45:12 [DEBUG] memberlist: Initiating push/pull sync with: 192.168.50.6:7946
    2016/10/26 23:45:12 Joing cluster of size
    2016/10/26 23:45:14 Members:
    2016/10/26 23:45:14 node01 192.168.50.6
    2016/10/26 23:45:16 Members:
    2016/10/26 23:45:16 node01 192.168.50.6
    2016/10/26 23:45:18 Members:
    2016/10/26 23:45:18 node01 192.168.50.6

So it starts up, joins itself, and starts its own cluster of one. Now let’s start node02 and see what happens.

node01

    vagrant@node01:~$ ./writing-swim-member.linux.amd64
    2016/10/26 23:45:41 Starting node.
    2016/10/26 23:45:41 [DEBUG] memberlist: TCP connection from=192.168.50.6:38570
    2016/10/26 23:45:41 [DEBUG] memberlist: Initiating push/pull sync with: 192.168.50.6:7946
    2016/10/26 23:45:41 Joing cluster of size
    2016/10/26 23:45:43 Members:
    2016/10/26 23:45:43 node01 192.168.50.6
    2016/10/26 23:45:45 Members:
    2016/10/26 23:45:45 node01 192.168.50.6
    2016/10/26 23:45:47 Members:
    2016/10/26 23:45:47 node01 192.168.50.6
    2016/10/26 23:45:49 Members:
    2016/10/26 23:45:49 node01 192.168.50.6
    2016/10/26 23:45:49 [DEBUG] memberlist: TCP connection from=192.168.50.10:36741
    2016/10/26 23:45:51 Members:
    2016/10/26 23:45:51 node02 192.168.50.10
    2016/10/26 23:45:51 node01 192.168.50.6
    2016/10/26 23:45:53 Members:
    2016/10/26 23:45:53 node02 192.168.50.10
    2016/10/26 23:45:53 node01 192.168.50.6
    2016/10/26 23:45:55 Members:
    2016/10/26 23:45:55 node01 192.168.50.6
    2016/10/26 23:45:55 node02 192.168.50.10
    2016/10/26 23:45:57 Members:
    2016/10/26 23:45:57 node02 192.168.50.10
    2016/10/26 23:45:57 node01 192.168.50.6
    2016/10/26 23:45:57 [DEBUG] memberlist: Initiating push/pull sync with: 192.168.50.10:7946
    2016/10/26 23:45:59 Members:
    2016/10/26 23:45:59 node01 192.168.50.6
    2016/10/26 23:45:59 node02 192.168.50.10
    2016/10/26 23:46:01 Members:
    2016/10/26 23:46:01 node02 192.168.50.10
    2016/10/26 23:46:01 node01 192.168.50.6
    2016/10/26 23:46:03 Members:
    2016/10/26 23:46:03 node01 192.168.50.6
    2016/10/26 23:46:03 node02 192.168.50.10

node02

    vagrant@node02:~$ ./writing-swim-member.linux.amd64
    2016/10/26 23:45:49 Starting node.
    2016/10/26 23:45:49 [DEBUG] memberlist: Initiating push/pull sync with: 192.168.50.6:7946
    2016/10/26 23:45:49 Joing cluster of size
    2016/10/26 23:45:51 Members:
    2016/10/26 23:45:51 node02 192.168.50.10
    2016/10/26 23:45:51 node01 192.168.50.6
    2016/10/26 23:45:53 Members:
    2016/10/26 23:45:53 node01 192.168.50.6
    2016/10/26 23:45:53 node02 192.168.50.10
    2016/10/26 23:45:55 Members:
    2016/10/26 23:45:55 node01 192.168.50.6
    2016/10/26 23:45:55 node02 192.168.50.10
    2016/10/26 23:45:57 [DEBUG] memberlist: TCP connection from=192.168.50.6:55391
    2016/10/26 23:45:57 Members:
    2016/10/26 23:45:57 node01 192.168.50.6
    2016/10/26 23:45:57 node02 192.168.50.10
    2016/10/26 23:45:59 Members:
    2016/10/26 23:45:59 node02 192.168.50.10
    2016/10/26 23:45:59 node01 192.168.50.6
    2016/10/26 23:46:01 Members:
    2016/10/26 23:46:01 node01 192.168.50.6
    2016/10/26 23:46:01 node02 192.168.50.10

We see that node02 makes a connection to node01, and they add each other to the members list. We can do the same with a third and fourth node, until each node is outputting something like this.

    2016/10/26 23:47:46 Members:
    2016/10/26 23:47:46 node03 192.168.50.7
    2016/10/26 23:47:46 node01 192.168.50.6
    2016/10/26 23:47:46 node02 192.168.50.10
    2016/10/26 23:47:46 node04 192.168.50.11

Now what happens if we kill the Go app on node03?

node01

    2016/10/26 23:48:50 Members:
    2016/10/26 23:48:50 node01 192.168.50.6
    2016/10/26 23:48:50 node02 192.168.50.10
    2016/10/26 23:48:50 node03 192.168.50.7
    2016/10/26 23:48:51 [DEBUG] memberlist: Failed UDP ping: node03 (timeout reached)
    2016/10/26 23:48:52 [INFO] memberlist: Suspect node03 has failed, no acks received
    2016/10/26 23:48:52 Members:
    2016/10/26 23:48:52 node03 192.168.50.7
    2016/10/26 23:48:52 node01 192.168.50.6
    2016/10/26 23:48:52 node02 192.168.50.10
    2016/10/26 23:48:52 [DEBUG] memberlist: Failed UDP ping: node03 (timeout reached)
    2016/10/26 23:48:54 [INFO] memberlist: Suspect node03 has failed, no acks received
    2016/10/26 23:48:54 Members:
    2016/10/26 23:48:54 node03 192.168.50.7
    2016/10/26 23:48:54 node01 192.168.50.6
    2016/10/26 23:48:54 node02 192.168.50.10
    2016/10/26 23:48:54 [INFO] memberlist: Marking node03 as failed, suspect timeout reached (1 peer confirmations)
    2016/10/26 23:48:56 Members:
    2016/10/26 23:48:56 node01 192.168.50.6
    2016/10/26 23:48:56 node02 192.168.50.10

node02

    2016/10/26 23:48:49 Members:
    2016/10/26 23:48:49 node01 192.168.50.6
    2016/10/26 23:48:49 node02 192.168.50.10
    2016/10/26 23:48:49 node03 192.168.50.7
    2016/10/26 23:48:50 [DEBUG] memberlist: Failed UDP ping: node03 (timeout reached)
    2016/10/26 23:48:51 [INFO] memberlist: Suspect node03 has failed, no acks received
    2016/10/26 23:48:51 Members:
    2016/10/26 23:48:51 node01 192.168.50.6
    2016/10/26 23:48:51 node02 192.168.50.10
    2016/10/26 23:48:51 node03 192.168.50.7
    2016/10/26 23:48:52 [DEBUG] memberlist: Failed UDP ping: node03 (timeout reached)
    2016/10/26 23:48:53 [INFO] memberlist: Suspect node03 has failed, no acks received
    2016/10/26 23:48:53 Members:
    2016/10/26 23:48:53 node02 192.168.50.10
    2016/10/26 23:48:53 node01 192.168.50.6
    2016/10/26 23:48:53 node03 192.168.50.7
    2016/10/26 23:48:54 [INFO] memberlist: Marking node03 as failed, suspect timeout reached (1 peer confirmations)
    2016/10/26 23:48:54 [DEBUG] memberlist: Failed UDP ping: node03 (timeout reached)
    2016/10/26 23:48:55 [INFO] memberlist: Suspect node03 has failed, no acks received
    2016/10/26 23:48:55 Members:
    2016/10/26 23:48:55 node01 192.168.50.6
    2016/10/26 23:48:55 node02 192.168.50.10

We can see that node01 and node02 both mark node03 as suspect, because it has not acked a ping in the last 2 seconds. Both 01 and 02 ask each other if they can reach 03, but they can’t, not because of a network partition, but because the app on node03 is not running. Then, when it continues to be unresponsive, the two remaining nodes exchange their state and confirm the failure: “Marking node03 as failed, suspect timeout reached (1 peer confirmations)”.

These timeouts and counts are based on the following configuration:

    func DefaultLocalConfig() *Config {
        conf := DefaultLANConfig()
        conf.TCPTimeout = time.Second
        conf.IndirectChecks = 1
        conf.RetransmitMult = 2
        conf.SuspicionMult = 3
        conf.PushPullInterval = 15 * time.Second
        conf.ProbeTimeout = 200 * time.Millisecond
        conf.ProbeInterval = time.Second
        conf.GossipInterval = 100 * time.Millisecond
        return conf
    }

There are a couple of settings that are pretty simple: TCPTimeout is just a timeout when connecting through TCP, and GossipInterval is how long the node waits between rounds of gossiping pending messages to other nodes (the full state exchange is governed by PushPullInterval). But some of the others are more important.

- `IndirectChecks`: how many other nodes are asked to ping the target on our behalf when a direct ping fails.
- `RetransmitMult`: a gossiped message is retransmitted RetransmitMult * log(N+1) times, to ensure that messages are propagated through the entire cluster.
- `SuspicionMult`: a node stays suspect for SuspicionMult * log(N+1) * ProbeInterval before it is declared dead.

In a production scenario it is likely that we would want a node to be a little more thorough when investigating suspicions that another node has failed, and so the number of `IndirectChecks` would be a little higher.

If you’re interested in playing around with the example yourself, you can find the source code at github.com/vogtb/writing-swim-member